[Guest Post] Automating Data Transparency with AI: A Framework for Detecting Data Availability Statements

— by Joshua Wong, Anson Cheung

Note: HKU Libraries is committed to fostering the next generation of researchers and advancing open science. In our recent collaboration with the Bachelor of Arts and Sciences in Social Data Science (BASc(SDS)) programme, where students tackle real-world challenges in their Final Year Projects, two groups of students explored automatic detection of Data Availability Statements (DAS). This is the first post in a two-part series in which the students share their exploration and findings. Today’s post is by Joshua Wong and Anson Cheung, who were both students in the BASc(SDS) programme at the University of Hong Kong in 2024-2025.

The push for open science means researchers are paying more attention to transparency, reproducibility, and data access. One important practice? Adding Data Availability Statements (DAS) to published papers.

As part of our Final Year Project (FYP) in the BASc in Social Data Science (BASc(SDS)) programme, we developed a scalable, AI-powered framework that automatically detects and evaluates DAS in full-text journal articles. The programme partners with industry collaborators so that students can tackle real-world challenges in their FYPs. This year, we were thrilled to take on the DAS project proposed by HKU Libraries, an exciting opportunity to apply our acquired knowledge and skills to a meaningful problem with real-world impact.

This project matters because reliable access to research data underpins transparency, integrity, and reproducibility (Wilkinson et al., 2016). Monitoring DAS practices at scale helps universities and funders assess the impact of data-sharing policies and identify gaps in compliance. Our tool directly supports the goals of HKU’s open science initiatives and the Research Grants Council (RGC)’s 2020 Open Access Plan (Research Grants Council, 2021).

Our Approach: Cost-Effective Automation at Scale

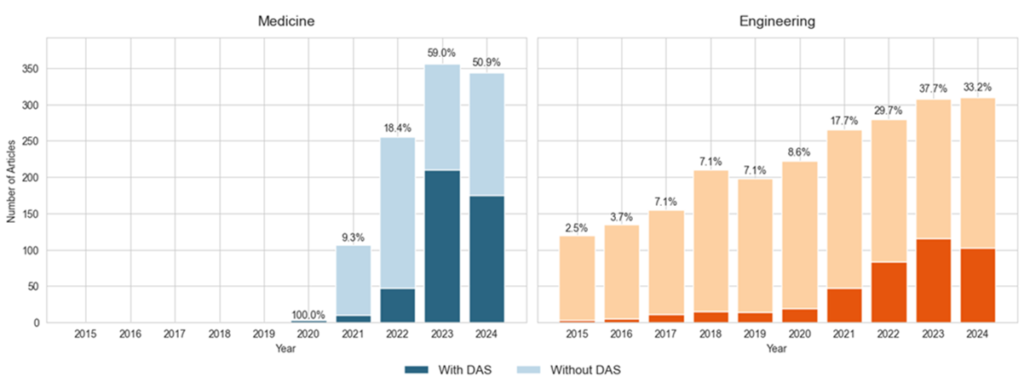

We analyzed 7,301 HKU-authored journal articles in medicine and engineering (2015–2024). These two disciplines were selected due to their high publication output and contrasting data-sharing norms — medical research often cites privacy or ethical restrictions, whereas engineering is more likely to utilize repositories such as GitHub (Mallasvik & Martins, 2021; Suhr et al., 2020).

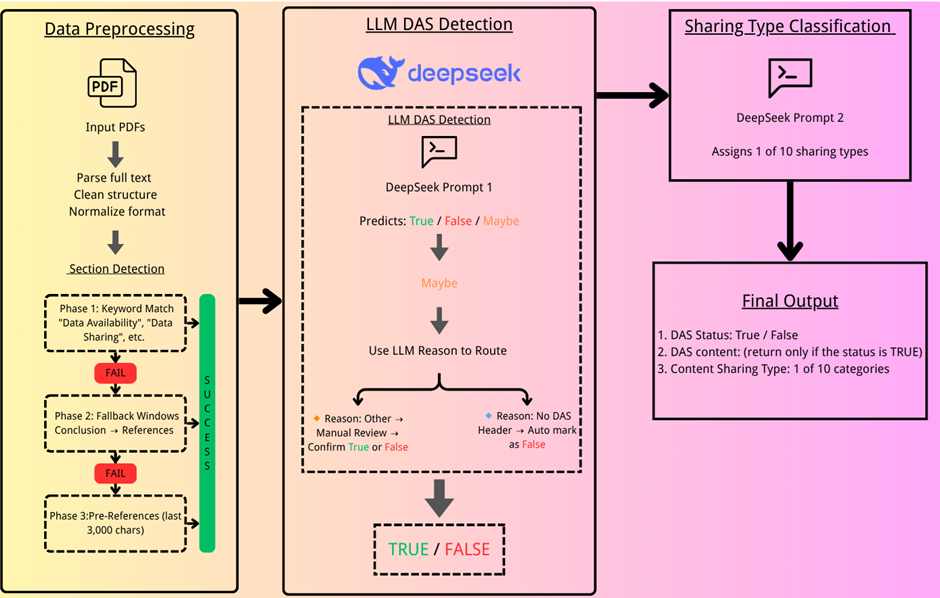

Our proposed solution:

- Text Extraction using PyMuPDF to parse full-text PDFs.

- Candidate Section Detection based on keywords and structural heuristics.

- LLM Classification using DeepSeek-V3-0324, chosen for its high accuracy and 95× lower cost than GPT-4 Turbo (DocsBot AI, 2025).

- Manual Review of ambiguous cases for final validation.
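The first two steps above can be sketched roughly as follows. This is a minimal illustration, not our production code: the keyword pattern `DAS_KEYWORDS`, the helper names `extract_text` and `candidate_windows`, and the 600-character window width are all assumptions made for this example. Only the resulting narrow windows would then be passed on to the LLM classifier.

```python
import re

# Hypothetical keyword pattern; the real keyword list is an assumption here.
DAS_KEYWORDS = re.compile(
    r"data (?:availability|sharing|access)(?: statement)?|availability of data",
    re.IGNORECASE,
)


def extract_text(pdf_path):
    """Return the full text of a PDF, one page after another, using PyMuPDF."""
    import fitz  # PyMuPDF, imported lazily so the rest works without it

    doc = fitz.open(pdf_path)
    try:
        return "\n".join(page.get_text() for page in doc)
    finally:
        doc.close()


def candidate_windows(text, width=600):
    """Return narrow text windows that start at each keyword hit.

    Hits that fall inside a window already collected are skipped, so the
    same passage is not sent to the classifier twice.
    """
    windows = []
    last_end = -1
    for match in DAS_KEYWORDS.finditer(text):
        if match.start() < last_end:
            continue
        end = min(len(text), match.start() + width)
        windows.append(text[match.start():end])
        last_end = end
    return windows
```

An article with no keyword hit yields an empty list and needs no LLM call at all, which is one plausible source of the token savings.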

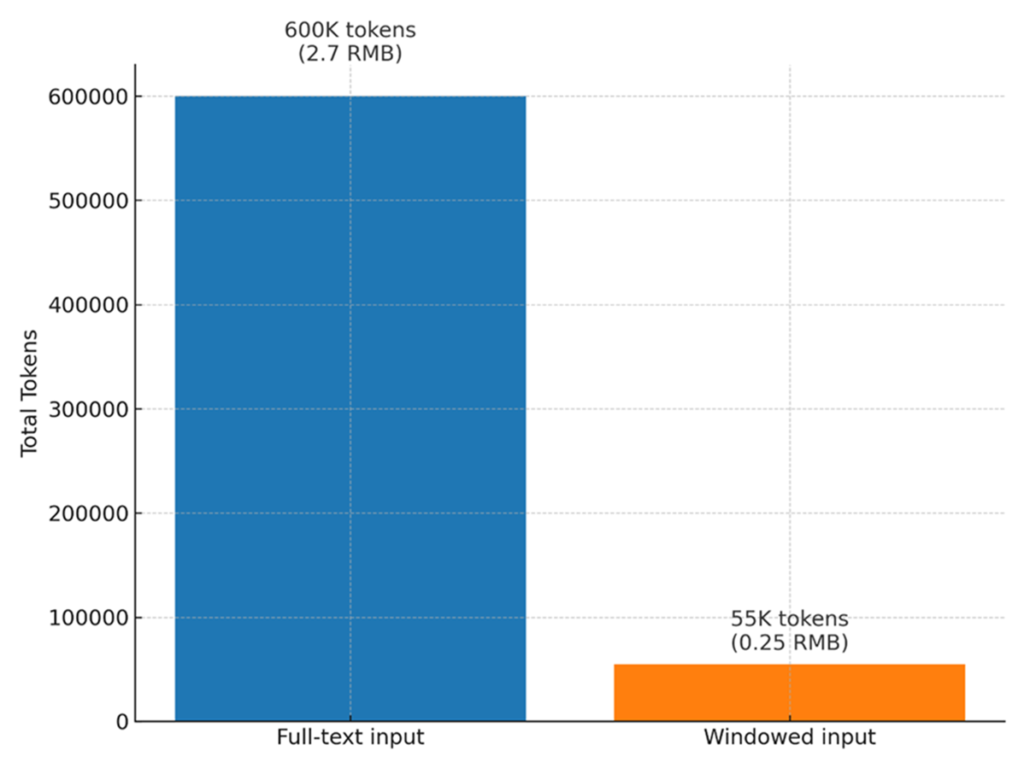

This modular workflow achieved a Boolean accuracy of 99.6%, with macro F1 scores exceeding 0.97. By processing only narrow text windows, we reduced token costs by over 90% without sacrificing performance.
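For readers unfamiliar with these metrics, the sketch below shows how accuracy and macro F1 can be computed from a manually labelled validation set. It assumes that "Boolean accuracy" refers to the yes/no label for whether an article contains a DAS; the function names and the toy labels are illustrative only and are not our evaluation data.

```python
def accuracy(y_true, y_pred):
    """Share of predictions that match the manual label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never occurs.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy labels for illustration only (True = article contains a DAS).
manual = [True, True, False, False]
predicted = [True, False, False, False]
print(accuracy(manual, predicted))
print(macro_f1(manual, predicted))
```

Because macro F1 averages the classes equally, it penalises a classifier that does well on the majority class but misses the rarer one, which plain accuracy can hide.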

Key Findings: Progress and Pitfalls in Data Sharing

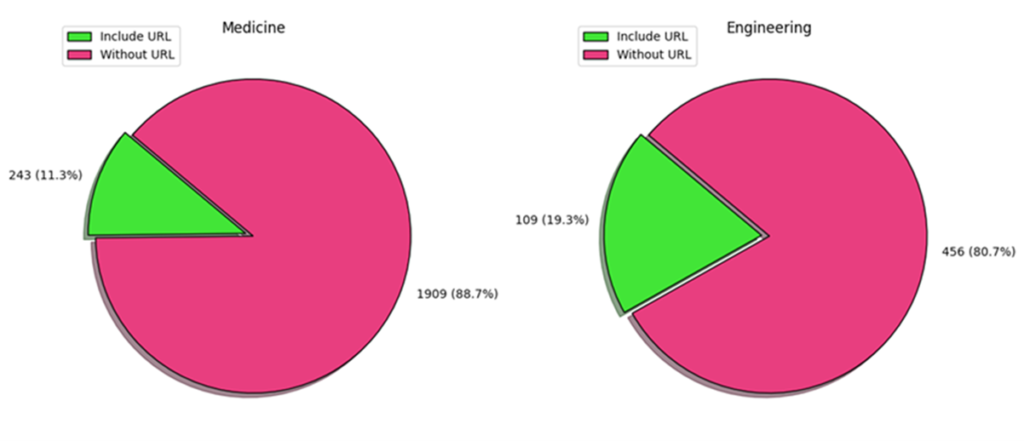

Our analysis of 7,301 HKU articles (2015–2024) reveals both progress and persistent challenges in data sharing practices. DAS adoption shows significant disciplinary divergence: between 2021 and 2024, 53.9% of medical papers included these statements, compared to just 17.1% in engineering. However, the presence of a DAS does not guarantee functional accessibility—only 11.3% of medical and 19.3% of engineering statements provided repository links or DOIs, while over 62% used generic “data available upon request” phrasing.
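The distinction between a statement that points to data and one that only offers it on request can be illustrated with a simple rule-based check. In our pipeline this judgement was made by the LLM classifier, so the regular expressions, category names, and `categorize_das` helper below are assumptions for illustration rather than the method behind the figures above.

```python
import re

# Illustrative patterns only; real statements vary far more than this.
DOI_RE = re.compile(r"\b10\.\d{4,9}/\S+")
URL_RE = re.compile(r"https?://\S+", re.IGNORECASE)
REQUEST_RE = re.compile(r"\b(?:up)?on (?:reasonable )?request\b", re.IGNORECASE)


def categorize_das(statement):
    """Assign a DAS to a coarse category based on surface cues."""
    if DOI_RE.search(statement) or URL_RE.search(statement):
        return "link_or_doi"
    if REQUEST_RE.search(statement):
        return "upon_request"
    return "other"


print(categorize_das("Data are available at https://doi.org/10.25442/hku.29324351."))
print(categorize_das("Data are available from the corresponding author upon reasonable request."))
```

A check like this could serve as a cheap sanity test on LLM output, but it cannot tell whether a link actually resolves to usable data.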

Understanding DAS as signposts reveals important nuances: the sampled medical studies frequently justified restricted access due to legitimate ethical constraints such as patient privacy. In contrast, among the sampled engineering papers, only 19% included repository links despite minimal sensitivity concerns. Tedersoo et al. (2021) found that 78% of “upon request” statements showed no evidence of actual data sharing when independently verified, highlighting how DAS execution often falls short of its intended signposting function.

The poster of the project can be found at the HKU Data Hub: https://doi.org/10.25442/hku.29324351.

Conclusion: A Cross-Disciplinary FYP for Open Science

This project showcases how our BASc(SDS) training connects theory with real-world impact. The FYP gave us the opportunity to bridge classroom learning with practical application and to better understand the skills relevant to today’s workforce. By leveraging AI for large-scale DAS analysis, we contributed not only to our own academic growth but also to the empirical understanding of open science practices.

We extend our gratitude to Professor Shihui Feng for academic guidance, Dr Man Fung Lo for programme support, and HKU Libraries colleagues Tina Yang, Wilson Tang, and Zesen Gao for their invaluable collaboration.

References

DocsBot AI. (2025, March 12). DeepSeek-V3 vs GPT-4 Turbo: Token cost comparison. DocsBot AI. https://docsbot.ai/models/compare/deepseek-v3/gpt-4-turbo#price-comparison

Gabelica, M., Bojčić, R., & Puljak, L. (2022). Many researchers were not compliant with their published data sharing statement: A mixed-methods study. Journal of Clinical Epidemiology, 150, 33–41. https://doi.org/10.1016/j.jclinepi.2022.05.019

Mallasvik, M. L., & Martins, J. T. (2021). Research data-sharing behaviour of engineering researchers in Norway and the UK: Uncovering the double face of Janus. Journal of Documentation, 77(2), 576–593. https://doi.org/10.1108/JD-08-2020-0135

Research Grants Council. (2021, January). Open access plan of the Research Grants Council. https://www.ugc.edu.hk/doc/eng/ugc/publication/report/report20210106/report20210106.pdf

Suhr, B., Dungl, J., Stocker, A., & Lepidi, M. (2020). Search, reuse and sharing of research data in materials science and engineering—A qualitative interview study. PLOS ONE, 15(9), e0239216. https://doi.org/10.1371/journal.pone.0239216

Tedersoo, L., Küngas, R., Oras, E., Köster, K., Eenmaa, H., Leijen, Ä., … & Sepp, T. (2021). Data sharing practices and data availability upon request differ across scientific disciplines. Scientific Data, 8(1), 192. https://doi.org/10.1038/s41597-021-00981-0

Wilkinson, M. D., Dumontier, M., Aalbersberg, I. J., Appleton, G., Axton, M., Baak, A., … & Mons, B. (2016). The FAIR Guiding Principles for scientific data management and stewardship. Scientific Data, 3(1), 1–9. https://doi.org/10.1038/sdata.2016.18